What They Say When She Speaks: Vile Nature of Gendered Hate Speech

- Shreya Raman, Rahmath Rahila Illiyas

On August 19, the Kerala government released a redacted version of the Hema Committee report which exposed the structural violence that exists in the Malayalam film industry. The report, released after a five-year delay, renewed discussions on the routine sexual harassment and labour rights violations that women face in unregulated workplaces like film industries.

The report also gave many survivors the courage to speak publicly about the violence they have faced: they filed police complaints and gave media interviews detailing the abuse. Since then, many prominent actors and directors have been booked, the Kerala government has formed a special investigation team (SIT) and the Kerala High Court has constituted a special bench to deal with these cases.

As the legal proceedings and the demand for improved systems continue, so does the violence, especially online. In the wake of the report’s release, Behanbox analysed online reactions to survivors’ statements to understand the nature of the gendered hate speech and trolling that survivors face.

In the first 10 days of September, we shortlisted three Instagram posts and five YouTube videos by media organisations reporting these allegations, in most cases direct interviews with the survivors. We then analysed their comments sections.

We found that of the almost 9,000 comments we scrutinised, the largest share (46%) were supportive of the survivors, but almost a third (31%) were abusive, mocking or sceptical.

The negative comments ranged from vile abuse to accusations of blackmail, extortion and ‘attention-seeking’ against the survivors. Some criticised the survivors for not speaking sooner and others posted laughing emojis to belittle the women and the gravity of their experiences.

In the last 10 years, internet penetration in India increased from 19% to 52.4% and the number of social media users increased from 106 million to 462 million, accounting for a third of the population. As internet and social media use becomes more widespread, entrenched patriarchal attitudes of shaming and blaming survivors of sexual assault have moved to online platforms. This has fuelled what is now called tech-facilitated gender-based violence, or TFGBV.

Gendered hate speech of the kind we analysed is just one of the many forms of TFGBV, which also includes image-based abuse, blackmail through threats to publish sexual information, cyberbullying and cyberstalking. Persons from marginalised communities are more vulnerable to such violence, and it has far-reaching impacts on the health, safety and political and economic freedom of women and non-binary people.

‘She Should Be Hanged’

The first response to the Hema Committee report by the Association for Malayalam Movie Artists (AMMA), the biggest body for Malayalam actors, was a press conference led by prominent actor and then general secretary, Siddique. The report criticised AMMA for not taking action on women’s complaints, but Siddique said that no one had reported an incident to them.

The next day, a young actor publicly reiterated her accusation that Siddique had raped her in a hotel room. She had originally spoken about the abuse in a Facebook post in 2019 and, after the report was published, she gave multiple media interviews about the issue and also filed a police complaint against Siddique. Siddique has been absconding since the Kerala High Court rejected his anticipatory bail plea on September 24. He then appealed to the Supreme Court, which granted him interim anticipatory bail. Despite the Kerala Police saying he is not cooperating with the investigation, the Supreme Court extended his interim bail by another two weeks on October 22.

A YouTube video of one of the first media interviews that the survivor gave after the report’s release is among the eight posts that we analysed. Of the 2,908 comments it elicited, 320 (11%) were suspicious of her allegation and around 250 (8.5%) abused or mocked her. Though she had posted about the incident on social media in 2019, 2020 and 2021, she was repeatedly asked in the comments section why she had not spoken earlier or filed a police complaint. Some even accused her of faking the allegations for financial gain.

However, a majority (60%) of the comments supported the survivor and 21% were neutral. Other survivors did not receive this kind of support.

When a junior artist said that she had been excited to get the phone numbers of famous actors Mamukkoya and Sudheesh and speak to them, before being made uncomfortable by their sexual advances, she was accused of leading them on and “honey trapping”.

Over 400 (21%) of the 1,880 comments under the YouTube video were negative, most calling her a liar, some commenting that she is not “beautiful enough” to be harassed or stating how “the way she smiled” indicated that she was lying.

Of the three Instagram posts that we analysed, two were about the accusations against popular new-age actor Nivin Pauly. Of the 400-odd comments under the posts, less than 2.5% were supportive, 54% mocked or abused the survivor and 30% were suspicious of the allegations.

One comment said that the survivor should be subjected to a lie-detection test and that if she is lying, “she should be hanged”. Similarly, many other comments talked about physical violence, including slapping the survivor and shooting her dead.

Such online abuse, commonly called “trolling”, is not a new phenomenon, especially in the Malayalam film industry where fan clubs of popular male actors are an integral part of the film culture. In 2017, when actor Parvathy Thiruvothu called out misogyny in superstar Mammootty’s film Kasaba, the actor’s fans abused her online for weeks, including with rape threats. Two people were arrested after she filed a complaint.

Identity And Compounding Violence

Such gendered hate speech is not just about misogyny; it overlaps with casteism, racism and other forms of discrimination, said Aindriya Barua, CEO and founder of ShhorAI, a hate-speech detection algorithm specially designed for code-mixed languages like Hinglish.

“Women from marginalised communities—such as Dalit, Adivasi, Muslim, queer, and disabled women—face compounded forms of violence that are more vicious and persistent. The hate directed at them is often deeply rooted in historical and systemic power imbalances, making their online experience more violent and targeted than that of more privileged women,” they said.

And this is clearly visible in our analysis. When a feminine-presenting man spoke up about how they approached a director for a role and the director made them strip naked and clicked photos, the comment sections were filled with homophobic slurs and insinuations that the survivor had “enjoyed it then and are complaining now” for fame.

Of the more than 2,000 comments under the video, 368 (18%) were mocking or abusive and another 215 (11%) were suspicious of the survivor.

When a Bengali actor accused the same director of inappropriate behaviour, the comments said that because she is Bengali, she “cannot be trusted”, that “a Bengali took away a Malayali’s job” and that she “looks like a drunk lady”. In addition to these targeted comments, there were also comments questioning her for not speaking sooner and, as in the case we mentioned earlier, accusing her of extortion.

This video only had around 230 comments, of which 10% were abusive or mocking while 16% were suspicious.

“Another disturbing trend I’ve noticed is how much public empathy and response are shaped by the perceived ‘respectability’ of the victim, based on prevailing social hierarchies,” said Aindriya.

Of all the posts that we analysed, the one that drew the most hate was one where an actor accused former AMMA vice-president and actor Jayan Cherthala of passing an inappropriate comment while shooting a rape scene. According to her, they were shooting the scene in a forest and the actors were sitting behind a rock when he said that when he looks at her, he feels like raping her.

Nearly 64% of the 1,200 comments were negative, with 35% abusing or mocking the female actor. The comments called her an “ammachi”, a derogatory word for an old woman, and said that she “should not be trusted because she is a b grade actress”. A lot of the comments were just laughing emojis and some said that it was a “joke” and that a “joke has also become torture”.

Lack of Targeted Solutions

Of the 45,000 comments that were used to build the ShhorAI database, 40% were targeted at gender and sexual minorities. To tackle this, a contextual understanding for content moderation is essential, said Aindriya.

“Social media platforms often rely on centralised algorithms that fail to grasp the nuances of hate speech in different languages and cultural contexts,” they added. “Hate speech in India, for instance, is deeply tied to caste, religion, and region-specific identities. A one-size-fits-all approach doesn’t work.”

Aindriya also expressed the need for adequate funding opportunities for initiatives like ShhorAI, especially because existing funding systems are severely skewed towards people from privileged backgrounds.

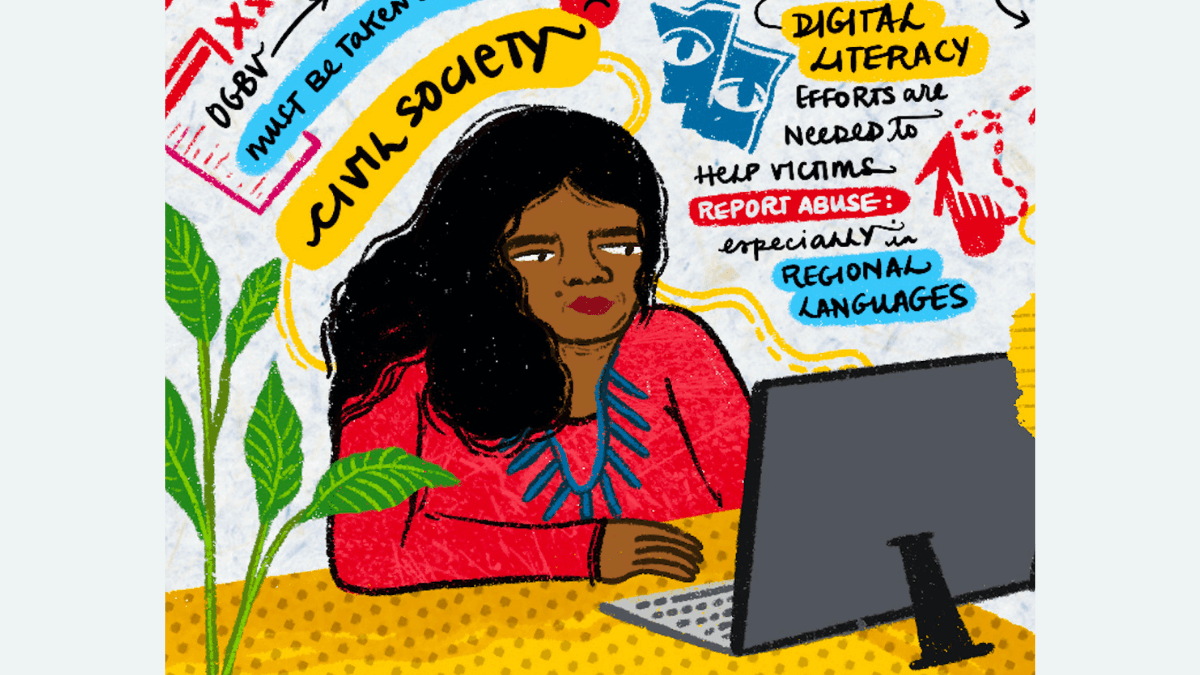

A 2022 International Center for Research on Women (ICRW) report also highlighted this critical gap. “Administrators at these [social media] companies are not equipped to handle reports of violence in languages other than English and are not able to fully comprehend regional idioms of violence. This leads to a non-response to reports in languages other than English, creating a barrier to many people in India who are experiencing abuse,” said the report.

In addition to language and technology-related solutions, the report also advocates for survivor-centric resources and legal support, improved digital literacy and security, and efforts to address social and cultural norms.

Methodology

Between September 1 and 10, we shortlisted eight social media posts from Instagram and YouTube. Our selection process prioritised video interviews that were first-person accounts of survivors of violence. This was done to ensure that most of the comments were reactions to the accusations. Once these posts were selected, we used a paid version of the Export Comments tool to extract the comments into Excel sheets.

The data was cleaned and consolidated to create a larger database. Then we created a list of categories and assigned each comment a category. These categories were:

- Supportive, for comments that provided encouragement and support to the survivor

- Neutral, for comments that had no emotions associated

- Suspicious, for comments that expressed doubt or disbelief about the accusation or the survivor

- Mocking, for comments that mocked the survivor or the accusations. These also included comments that were just a bunch of laughing emojis

- Abusive, for comments that were violent or abusive towards the survivor. These also include threats

Once the data was categorised, we analysed it by post and by the background of the survivor.
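
The per-post tallying described above could be reproduced along the following lines. This is an illustrative sketch only, not the script used for this analysis: it assumes each categorised comment is available as a (post, category) pair, and the function name `category_shares`, the post identifiers and the sample rows are all hypothetical.

```python
from collections import Counter, defaultdict

# Category labels as listed in the methodology.
CATEGORIES = ("Supportive", "Neutral", "Suspicious", "Mocking", "Abusive")


def category_shares(rows):
    """Return {post_id: {category: percentage}} from (post_id, category) rows."""
    counts = defaultdict(Counter)
    for post_id, category in rows:
        if category not in CATEGORIES:
            raise ValueError(f"unknown category: {category!r}")
        counts[post_id][category] += 1

    shares = {}
    for post_id, counter in counts.items():
        total = sum(counter.values())
        # Percentage of each category among this post's comments, to one decimal.
        shares[post_id] = {
            c: round(100 * counter[c] / total, 1) for c in CATEGORIES
        }
    return shares


# Made-up rows for illustration; these are not the article's data.
sample = [
    ("video_a", "Supportive"),
    ("video_a", "Supportive"),
    ("video_a", "Suspicious"),
    ("video_a", "Mocking"),
    ("post_b", "Abusive"),
    ("post_b", "Neutral"),
]
result = category_shares(sample)
print(result["video_a"])
```

Grouping by post before computing percentages matters here because the posts differ widely in size, from around 230 comments to nearly 3,000, so pooled percentages would be dominated by the largest videos.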
